IT Audit, Cloud Cybersecurity, Governance Risk and Compliance (GRC)


Citadel Cloud Management provides an in-depth curriculum centered on the Cloud Controls Matrix (CCM) created by the Cloud Security Alliance (CSA). The curriculum helps enterprises develop and refine their cloud security strategies by using the CCM framework to assess and manage the risks associated with cloud computing providers.

Curriculum Content:

- Introduction to the Cloud Controls Matrix (CCM):

- Overview of the CCM framework and its purpose in cloud security.

- Explanation of how CCM helps in defining security requirements and assessing cloud providers.

- Understanding the relationship of CCM with other industry-accepted security standards and frameworks, such as ISO 27001/27002, NIST, PCI DSS, and more.

See references: https://cloudsecurityalliance.org/blog/2020/10/16/what-is-the-cloud-controls-matrix-ccm and https://cpl.thalesgroup.com/faq/data-security-cloud/what-cloud-security-alliance

Detailed Domain Coverage (the 16 domains of CCM v3.0.1):

- Application & Interface Security: Principles governing application security, data integrity, and customer access requirements.

- Audit Assurance & Compliance: Processes for audit planning, independent audits, and mapping to regulations and standards.

- Business Continuity Management & Operational Resilience: Strategies for business continuity planning, testing, and maintenance.

- Change Control & Configuration Management: Handling changes, acquiring new applications or data, and managing development and quality testing.

- Data Security & Information Lifecycle Management: Best practices for managing data flow, inventory, and lifecycle.

- Data Center Security: Physical security controls, asset management, and access control for data centers.

- Encryption & Key Management: Policies for key management, encryption, and protecting sensitive data.

- Governance & Risk Management: Risk assessments, policy enforcement, and oversight in managing data-focused risks.

- Human Resources Security: Governance of employee-related security aspects, including termination, mobile device management, and training.

- Identity & Access Management: Credential management, segregation of duties, and access restrictions.

- Infrastructure & Virtualization Security: Intrusion detection, vulnerability management, and OS hardening.

- Interoperability & Portability: Use of APIs, data requests, and ensuring portability between services.

- Mobile Security: Management of mobile devices, anti-malware practices, and app store policies.

- Security Incident Management, Cloud Forensics & E-Discovery: Incident reporting, response management, and legal preparation.

- Supply Chain Management, Accountability & Transparency: Controls related to data quality, incident reporting, and supply chain metrics.

- Threat & Vulnerability Management: Anti-malware (antivirus) controls, patch management, and vulnerability remediation.
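The Identity & Access Management domain lists segregation of duties among its controls. As an illustration, here is a minimal Python sketch that flags users holding two roles that should be kept apart. The role names and conflicting pairs are hypothetical assumptions for the example; CCM does not prescribe them.

```python
from itertools import combinations

# Hypothetical pairs of roles that no single user should hold at the same time.
CONFLICTING_ROLES = {
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"deploy_code", "approve_change"}),
}


def sod_violations(assignments: dict[str, set[str]]) -> dict[str, list[tuple[str, str]]]:
    """Return, for each user, every conflicting role pair that user holds."""
    violations: dict[str, list[tuple[str, str]]] = {}
    for user, roles in assignments.items():
        # Examine every pair of the user's roles; sorting keeps the output deterministic.
        for a, b in combinations(sorted(roles), 2):
            if frozenset({a, b}) in CONFLICTING_ROLES:
                violations.setdefault(user, []).append((a, b))
    return violations


if __name__ == "__main__":
    users = {
        "alice": {"create_vendor", "approve_payment"},
        "bob": {"deploy_code"},
        "carol": {"deploy_code", "approve_change", "read_reports"},
    }
    print(sod_violations(users))
```

Here "alice" and "carol" are reported, while "bob" is not, because he holds only one role from any conflicting pair.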

Mapping to Standards and Frameworks:

- CCM v4 is mapped to standards such as ISO/IEC 27001/27002/27017/27018, CIS Controls v8, and others.

- CCM v3.0.1 mappings include standards like NIST SP 800-53, PCI DSS, and ISACA COBIT.

- Understanding how fulfilling CCM controls can help meet requirements of multiple standards and regulations simultaneously.
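The mapping idea can be made concrete with a short Python sketch. The control IDs and requirement IDs below are hypothetical placeholders, not values from the official CCM mapping (in practice they would come from the CSA spreadsheet). The sketch collects, per framework, the requirements addressed by a set of implemented controls.

```python
from collections import defaultdict

# Hypothetical excerpt of a control-to-framework mapping (placeholder IDs only).
CONTROL_MAPPINGS: dict[str, dict[str, list[str]]] = {
    "IAM-X1": {"ISO 27001": ["iso-req-a"], "NIST SP 800-53": ["nist-req-1"]},
    "EKM-X1": {"ISO 27001": ["iso-req-b"], "PCI DSS": ["pci-req-3"]},
    "SEF-X1": {"NIST SP 800-53": ["nist-req-2"], "PCI DSS": ["pci-req-10"]},
}


def covered_requirements(implemented: set[str]) -> dict[str, set[str]]:
    """Group, by framework, the requirements addressed by the implemented controls."""
    coverage: dict[str, set[str]] = defaultdict(set)
    for control in implemented:
        # Controls missing from the mapping simply contribute nothing.
        for framework, reqs in CONTROL_MAPPINGS.get(control, {}).items():
            coverage[framework].update(reqs)
    return dict(coverage)


if __name__ == "__main__":
    print(covered_requirements({"IAM-X1", "EKM-X1"}))
```

Implementing two controls here produces evidence for three frameworks at once, which is the benefit the mapping is meant to surface.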

Application and Implementation:

- Practical application of the CCM framework to develop a cloud security strategy.

- Using the CCM spreadsheet to align cloud security controls with multiple frameworks and simplify compliance.
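A minimal sketch of working with a CSV export of the spreadsheet to list the controls that map to one framework. The column names (`Control ID`, `Domain`, `ISO 27001`, `PCI DSS`) and the rows are hypothetical assumptions; the actual CSA workbook uses its own layout, so the headers would need adjusting to the version you download.

```python
import csv
import io

# Hypothetical CSV layout for illustration; the real CSA workbook headers differ.
SAMPLE_CSV = """Control ID,Domain,ISO 27001,PCI DSS
AIS-X1,Application & Interface Security,iso-req-a,
DSI-X1,Data Security & Information Lifecycle Management,iso-req-b,pci-req-3
TVM-X1,Threat & Vulnerability Management,,pci-req-6
"""


def controls_for_framework(csv_text: str, framework: str) -> list[tuple[str, str]]:
    """Return (control ID, mapped requirement) pairs where the framework column is filled in."""
    reader = csv.DictReader(io.StringIO(csv_text))
    results: list[tuple[str, str]] = []
    for row in reader:
        # A missing column or empty cell means the control has no mapping to this framework.
        mapped = (row.get(framework) or "").strip()
        if mapped:
            results.append((row["Control ID"], mapped))
    return results


if __name__ == "__main__":
    print(controls_for_framework(SAMPLE_CSV, "PCI DSS"))
```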

Lessons:

1. Principles of Cybersecurity: Framework and Security Fundamentals

Cybersecurity is a multi-layered and complex field that involves protecting computer systems, networks, and data from unauthorized access, attacks, and damage. Understanding its principles, frameworks, and security fundamentals is critical to protecting organizations from cyber threats. This lesson covers the principles of cybersecurity, a general cybersecurity framework, and key security fundamentals.

2. Layers of Cybersecurity

Cybersecurity is a multi-layered approach that aims to protect data, networks, and systems from various threats. The layers work together to form a defense-in-depth strategy, so that if one layer is breached, the others continue to provide protection. This lesson covers the layers of cybersecurity, their key components, implementation steps, and relevant resources.
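A minimal Python sketch of the defense-in-depth idea: a request is allowed only if every layer passes, so bypassing one layer is not enough. The three layers, the internal address prefix, the user list, and the injection check are all hypothetical and deliberately simplistic; they stand in for real network, identity, and application controls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    source_ip: str
    user: str
    has_mfa: bool
    payload: str


# Each hypothetical layer returns True if the request passes that layer's check.
Layer = Callable[[Request], bool]


def network_layer(req: Request) -> bool:
    # Network control: only allow traffic from an (illustrative) internal 10.x range.
    return req.source_ip.startswith("10.")


def identity_layer(req: Request) -> bool:
    # Identity control: a known user who completed multi-factor authentication.
    return req.user in {"alice", "bob"} and req.has_mfa


def application_layer(req: Request) -> bool:
    # Application control: crude input validation rejecting one obvious injection string.
    return "' OR 1=1" not in req.payload


LAYERS: list[tuple[str, Layer]] = [
    ("network", network_layer),
    ("identity", identity_layer),
    ("application", application_layer),
]


def evaluate(req: Request) -> tuple[bool, list[str]]:
    """Allow the request only if every layer passes; report which layers blocked it."""
    blocked_by = [name for name, check in LAYERS if not check(req)]
    return (not blocked_by, blocked_by)
```

For example, stolen credentials used from an external address pass the identity layer but are still stopped by the network layer, and an insider sending an injection string is stopped by the application layer.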

3. Cloud Controls Matrix: Security Domains

The Cloud Controls Matrix (CCM) is a framework created by the Cloud Security Alliance (CSA): a baseline set of security controls designed to help organizations assess the security and risk posture of their cloud computing providers. It covers a range of domains and security requirements specific to cloud environments, giving enterprises a consistent method for evaluating a provider's security measures and compliance status.
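As a rough illustration of using the CCM to assess a provider, the sketch below tallies questionnaire answers per domain and lists the gaps. The control IDs and answers are hypothetical; CSA's companion Consensus Assessments Initiative Questionnaire (CAIQ) is a common source of such answers, but its exact format is not modeled here.

```python
from collections import Counter

# Hypothetical provider answers keyed by CCM-style control ID ("DOMAIN-NN").
ANSWERS = {
    "IAM-01": "yes",
    "IAM-02": "no",
    "EKM-01": "yes",
    "EKM-02": "yes",
    "BCR-01": "na",
    "BCR-02": "no",
}


def domain_summary(answers: dict[str, str]) -> dict[str, dict[str, int]]:
    """Count yes/no/na answers per domain prefix (the part before the hyphen)."""
    summary: dict[str, Counter] = {}
    for control_id, answer in answers.items():
        domain = control_id.split("-", 1)[0]
        summary.setdefault(domain, Counter())[answer.lower()] += 1
    return {domain: dict(counts) for domain, counts in summary.items()}


def gaps(answers: dict[str, str]) -> list[str]:
    """Controls the provider reports as not implemented, sorted for review."""
    return sorted(cid for cid, ans in answers.items() if ans.lower() == "no")


if __name__ == "__main__":
    print(domain_summary(ANSWERS))
    print(gaps(ANSWERS))
```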

4. Cloud Security, Risk Assessment, and Evaluation Controls

This set of modules aims to provide a comprehensive guide to cloud security, risk management, and evaluating cloud service providers (CSPs). These modules will walk you through the basics of cloud computing and security, the process of conducting risk assessments, and evaluating potential CSPs, including how to use frameworks like the Cloud Controls Matrix (CCM) in procurement.
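Risk assessments are often summarized with a likelihood × impact matrix. A minimal Python sketch on 1 to 5 scales follows; the example risks and the high/medium/low thresholds are hypothetical, since each organization sets its own scales and risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject values outside the 5x5 matrix.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Simple multiplicative score on a 5x5 matrix (range 1 to 25).
        return self.likelihood * self.impact


def rating(score: int) -> str:
    # Hypothetical thresholds for illustration only.
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks: list[Risk]) -> list[tuple[str, int, str]]:
    """Return risks sorted from highest to lowest score, with their rating."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, rating(r.score)) for r in ranked]


if __name__ == "__main__":
    register = [
        Risk("CSP outage", 3, 4),
        Risk("Misconfigured storage bucket", 4, 5),
        Risk("Vendor lock-in", 2, 3),
    ]
    for entry in prioritize(register):
        print(entry)
```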

5. Governance, Risk, and Compliance (GRC)

Overview:

Governance, Risk, and Compliance (GRC) is an integrated approach to ensuring that an organization is able to meet its business objectives while managing risks and adhering to relevant regulations and standards. GRC frameworks help organizations stay on top of their responsibilities, improve decision-making, and minimize potential operational disruptions.

The three key pillars of GRC are:

- Governance: Ensuring that an organization’s leadership and management practices align with its strategic goals, fostering transparency, accountability, and integrity.

- Risk Management: Identifying, assessing, and mitigating risks that could impact an organization’s ability to achieve its objectives.

- Compliance: Ensuring adherence to laws, regulations, and internal policies relevant to the organization’s industry and business operations.
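To make the compliance pillar concrete, here is a minimal Python sketch that checks resource configurations against a few internal policy rules. The rule names, configuration fields, and resources are hypothetical assumptions, not taken from any specific cloud provider's API.

```python
from typing import Callable

# Hypothetical internal policy: each rule name maps to a check on a config dict.
POLICY: dict[str, Callable[[dict], bool]] = {
    "encryption_at_rest": lambda cfg: cfg.get("encrypted") is True,
    "no_public_access": lambda cfg: cfg.get("public") is not True,
    "logging_enabled": lambda cfg: cfg.get("logging") is True,
}


def check_compliance(resources: dict[str, dict]) -> dict[str, list[str]]:
    """Return, per non-compliant resource, the names of the policy rules it fails."""
    findings: dict[str, list[str]] = {}
    for name, cfg in resources.items():
        failed = [rule for rule, check in POLICY.items() if not check(cfg)]
        if failed:
            findings[name] = failed
    return findings


if __name__ == "__main__":
    inventory = {
        "db-prod": {"encrypted": True, "public": False, "logging": True},
        "bucket-share": {"encrypted": False, "public": True, "logging": True},
        "vm-test": {"encrypted": True},
    }
    print(check_compliance(inventory))
```

A missing setting is treated conservatively for encryption and logging (it fails), while a missing `public` flag is treated as private; a real policy would need to state such defaults explicitly.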

6. Internal Audit

An IT audit evaluates the effectiveness of an organization's information technology systems, processes, and controls. The goal is to ensure that systems are secure, efficient, compliant with applicable laws, and optimized for performance. Audits draw on a variety of methodologies, standards, and tools to detect vulnerabilities, ensure regulatory compliance, and improve IT governance.

Learning Objectives:

- Understand the role and importance of IT audits: Learn how audits protect organizational assets, ensure compliance, and enhance operational efficiency.

- Learn key components of an IT audit: Understand the audit process from planning to reporting, covering areas like security, performance, and compliance.

- Develop auditing techniques and methodologies: Gain a deep understanding of auditing principles, risk-based approaches, and data collection methods.

- Gain hands-on experience with IT audit tools: Become familiar with popular tools, such as Nessus and ACL, that are used to assess IT systems.
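Data collection in an audit often means testing a sample of a control's population (for example, change tickets) rather than every item. A minimal Python sketch of reproducible simple random sampling follows; the ticket IDs, sample size, and seed are hypothetical, and real sample sizes come from the audit methodology in use.

```python
import random


def select_sample(population: list[str], sample_size: int, seed: int) -> list[str]:
    """Pick a reproducible simple random sample without replacement.

    Recording the seed lets a reviewer re-create exactly the same selection.
    """
    if sample_size > len(population):
        raise ValueError("sample size cannot exceed population size")
    rng = random.Random(seed)
    # Sort the selection so it reads in ticket order in the workpapers.
    return sorted(rng.sample(population, sample_size))


if __name__ == "__main__":
    tickets = [f"CHG-{n:04d}" for n in range(1, 251)]  # hypothetical change tickets
    print(select_sample(tickets, 25, seed=2024))
```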

7. SOC (System and Organization Controls) Compliance Overview

SOC (System and Organization Controls) compliance means that a service organization has completed an independent third-party audit whose report attests that it has certain controls in place. Although often called a certification, a SOC report is an attestation issued under AICPA standards. The term generally refers to SOC 1, SOC 2, or SOC 3; SOC for Cybersecurity and SOC for Supply Chain reports also exist.

8. SOC 1 Compliance – Financial Reporting Controls

9. SOC 2 and Cloud Compliance – Data Security, Availability, and Privacy

10. SOC 3 Compliance – Public Assurance Reports

11. SOX Compliance – Financial Reporting and IT Controls

12. Sarbanes–Oxley Act (SOX) Compliance with FinTech

This curriculum guides learners through the Sarbanes–Oxley Act (SOX): its significance in financial reporting, its compliance measures, and its impact on FinTech companies. It covers SOX's 11 titles, exploring each in detail with practical implementation steps and focusing on how these regulations apply in the FinTech industry.

Recent Posts

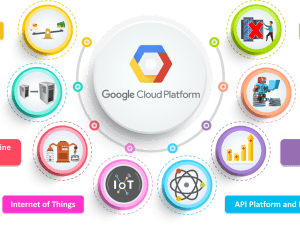

- Google Cloud Platform’s Latest Innovations: Transforming the Future of AI and Cloud Computing

- Exploring Azure’s Cutting-Edge Services in 2025: A Step Towards a Smarter Future

- What Businesses Need to Know About Quantum Computing and Big Data

- The Future of Edge AI and Its Impact on Cloud SaaS Solutions

- AI, Big Data, and Cloud SaaS: A Triple Threat for Business Growth

Recent Comments

Archives

Categories

ADDRESS

Houston, Texas USA

US LINE

+1 (346) 652-4970

NIGERIA LINE

081 2852 0152

MAIL ADDRESS

info@citadelcloudmanagement.com


- © 2025. All Rights Reserved By Citadel Cloud Management